Previous Research

Office of the Future

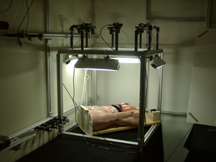

For some years we have been working on what we call the “Office of the Future”. This work is based on a unified application of computer vision and computer graphics in a system that combines and builds upon the notions of panoramic image display, tiled display systems, image-based modeling, and immersive environments. Our goals include a better everyday graphical display environment, and 3D tele-immersion capabilities that allow distant people to feel as though they are together in a shared office space.

Our basic idea is to use computer vision techniques, in real time, to dynamically extract per-pixel depth and reflectance information for the visible surfaces in the Office, such as walls, furniture, objects and people (see the accompanying image). Using this depth information, one could then display (project) images on the surfaces, render images of captured models of the surfaces, or interpret changes in the surfaces. We are primarily concentrating on the display and capture aspects. Our approach to the former is to designate everyday, irregular surfaces in a person’s office to be part of a spatially immersive display, and then project high-resolution graphics and text onto those surfaces. Our approach to the latter is to capture, reconstruct, and then transmit dynamic image-based models over a network for display at a remote site.

3D Telepresence for Medical Consultation

The project will develop and test 3D telepresence technologies that are permanent, portable and handheld in remote medical consultations involving an advising healthcare provider and a distant advisee. Advanced trauma life support and endotracheal intubation will be used initially in developing the system and in controlled experiments. These will compare the shared sense of presence offered by view-dependent 3D telepresence technology to current 2D videoconferencing technology. The project will focus on barriers to 3D telepresence, including real-time acquisition and novel view generation, network congestion and variability, and tracking and displays for producing accurate 3D depth cues and motion parallax. Once the effectiveness of the system in controlled conditions is established, future efforts would involve adapting the technology for use in a variety of clinical scenarios such as remote hospital to tertiary center emergency consultations, portable in-transit diagnosis and stabilization systems, intraoperative consultations and tumor boards. Quality of medical diagnosis and treatment will be used to assess the system as well as judgments concerning its acceptance and practicality by patients, physicians, nursing staff, technicians, and hospital administrators. Cost-effectiveness of 2D and 3D strategies will be analyzed.

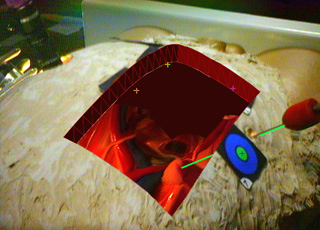

Ultrasound/Medical Augmented Reality Research

Our research group is working to develop and operate a system that allows a physician to see directly inside a patient, using augmented reality (AR). AR combines computer graphics with images of the real world. This project uses ultrasound echography imaging, laparoscopic range imaging, a video see-through head-mounted display (HMD), and a high-performance graphics computer to create live images that combine computer-generated imagery with the live video image of a patient. An AR system displaying live ultrasound data or laparoscopic range data in real time and properly registered to the part of the patient that is being scanned could be a powerful and intuitive tool that could be used to assist and to guide the physician during various types of ultrasound-guided and laparoscopic procedures.

High-Performance Graphics Architectures (Pixel-Planes & PixelFlow)

Since 1980, we have been exploring computer architectures for 3D graphics that, compared to today’s systems, offer dramatically higher performance with wide flexibility for a broad range of applications. A major continuing motivation has been to provide useful systems for our researchers whose work in medical visualization, molecular modeling, and architectural design exploration requires graphics power far beyond that available in today’s commercial systems. Since there does not appear to be a sizable market for such “ultra-high-end” (and therefore very expensive) systems, commercial vendors do not seem to be even exploring the possibilities there. We have thus been able to build systems that exhibit new levels of performance, explore sometimes revolutionary graphics architectures, demonstrate the feasibility of some ideas, and at the same time provide systems with which our local colleagues can perform research years before they would be able to conduct it if they had to rely on commercial systems. We are now focusing on image-based rendering, with the goal of designing and building a high-performance graphics engine that uses images as the principal rendering primitive. Browse our Web pages to learn more about our research, the history of the group, publications, etc.

Telecollaboration

Telecollaboration is unique among the Center’s research areas and driving applications in that it truly is a single multi-site, multi-disciplinary project. The goal has been to develop a distributed collaborative design and prototyping environment in which researchers at geographically distributed sites can work together in real time on common projects. The Center’s telecollaboration research embraces both desktop and immersive VR environments in a multi-faceted approach that includes:

– Systems software that provides a shared virtual environment.

– VR software that addresses the new issues which arise in collaborative VR environments.

– Modeling and interaction software that provides end-to-end, real-time, telecollaborative product development support, from the creation of rough sketches through parametric modeling to the creation of a finished product.

– Scene acquisition software that captures large, dynamically changing areas.

– Hardware that provides the wide field of view tracking needed by the scene acquisition software.

3D Laparoscopic Visualization

Despite centuries of development, most surgery today is in one respect the same as it was hundreds of years ago. Traditionally, the problem has been that surgeons must often cut through many layers of healthy tissue in order to reach the structure of interest, thereby inflicting significant damage to the healthy tissue. Recent technological developments have dramatically reduced the amount of unnecessary damage to the patient, by enabling the physician to visualize aspects of the patient’s anatomy and physiology without disrupting the intervening tissues. In particular, imaging methods such as CT, MRI, and ultrasound echography make possible the safe guidance of instruments through the body without direct sight by the physician. In addition, optical technologies such as laparoscopy allow surgeons to perform entire procedures with only minimal damage to the patient. However, these new techniques come at a cost to the surgeon, whose view of the patient is not as clear and whose ability to manipulate the instruments is diminished in comparison with traditional open surgery.

Emerging augmented reality (AR) technologies have the potential to bring the direct visualization advantage of open surgery back to its minimally invasive counterparts. They can augment the physician’s view of his surroundings with information gathered from imaging and optical sources, and can allow the physician to move arbitrarily around the patient while looking into the patient. A physician might be able, for example, to see the exact location of a lesion on a patient’s liver, in three dimensions and within the patient, without making a single incision. A laparoscopic surgeon may be able to view the pneumoperitoneum from any angle merely by turning his head in that direction, without needing to physically adjust the endoscopic camera. Augmented reality may be able to free the surgeon from the technical limitations of his imaging and visualization equipment, thus recapturing the physical simplicity of open surgery. We are on a quest to see whether this fusion of computer images with the real-world surroundings will be of use to the physician. We have worked since 1992 with AR visualization of ultrasound imagery. Our first systems were used for passive obstetrics examinations. We moved to ultrasound-guided needle biopsies and cyst aspirations in April 1995. The third application for our system is laparoscopy visualization. We modified our ultrasound AR system to acquire and represent the additional information required for a laparoscopic view into the body.

Wide-Area Tracking

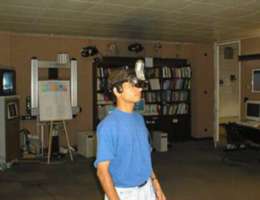

Head-mounted displays (HMDs) and head-tracked stereoscopic displays provide the user with the impression of being immersed in a simulated three-dimensional environment. To achieve this effect, the computer must constantly receive precise six-dimensional (6D) information about the position and orientation (the pose) of the user’s head, and must rapidly adjust the displayed image(s) to reflect the changing head locations. This pose information comes from a tracking system. We are working on wide-area systems for the 6D tracking of heads, limbs, and hand-held devices. In April 1997, the UNC Tracker Research Group brought its latest wide-area ceiling tracker online, the HiBall Tracking System. The system (shown in images above) uses relatively inexpensive ceiling panels housing LEDs, a miniature camera cluster called a HiBall, and the single-constraint-at-a-time (SCAAT) algorithm, which converts individual LED sightings into position and orientation data. The HiBall Tracker provides sub-millimeter position accuracy and resolution, and better than 2/100 of a degree of orientation accuracy and resolution, over a 500 square foot area. This is the largest tracking system of comparable accuracy. The HiBall Tracking System is being sold commercially at this time.
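The single-constraint-at-a-time idea can be illustrated with a heavily simplified sketch. It is not the HiBall implementation: it assumes a stationary 2D target, a linear scalar measurement model, and no motion model, and the names `scaat_update` and `track` are hypothetical. Each measurement constrains the state along only one direction, so no single measurement determines the position, yet a Kalman-style update applied to one measurement at a time converges as constraints from different directions arrive.

```python
def scaat_update(state, P, h, z, r):
    """Fold one scalar measurement z = h . state + noise into the estimate.

    state: [x, y] estimate; P: 2x2 symmetric covariance (list of lists);
    h: direction of the single constraint; r: measurement noise variance.
    """
    # Predicted measurement for the current estimate.
    z_pred = h[0] * state[0] + h[1] * state[1]
    # P h, reused for the innovation variance, the gain, and the covariance update.
    Ph = [P[0][0] * h[0] + P[0][1] * h[1],
          P[1][0] * h[0] + P[1][1] * h[1]]
    s = h[0] * Ph[0] + h[1] * Ph[1] + r
    # Kalman gain for this single constraint.
    K = [Ph[0] / s, Ph[1] / s]
    residual = z - z_pred
    new_state = [state[0] + K[0] * residual, state[1] + K[1] * residual]
    # P <- (I - K h^T) P, which equals P - K (P h)^T because P is symmetric.
    new_P = [[P[i][j] - K[i] * Ph[j] for j in range(2)] for i in range(2)]
    return new_state, new_P


def track(measurements, r=0.01):
    """Process (h, z) pairs one at a time, starting from a vague prior."""
    state = [0.0, 0.0]
    P = [[100.0, 0.0], [0.0, 100.0]]
    for h, z in measurements:
        state, P = scaat_update(state, P, h, z, r)
    return state, P
```

For example, feeding noise-free constraints on a target at (3, -2) along the directions (1, 0), (0, 1), and (0.6, 0.8) moves the estimate close to the true position after the first two updates. A fuller tracker would also propagate the state with a motion model and inflate `P` between measurements.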

Image-Based Rendering

In the pursuit of photo-realism in conventional polygon-based computer graphics, models have become so complex that most of the polygons are smaller than one pixel in the final image. At the same time, graphics hardware systems at the very high end are becoming capable of rendering, at interactive rates, nearly as many triangles per frame as there are pixels on the screen. Formerly, when models were simple and the triangle primitives were large, the ability to specify large, connected regions with only three points was a considerable efficiency in storage and computation. Now that models contain nearly as many primitives as pixels in the final image, we should rethink the use of geometric primitives to describe complex environments. We are investigating an alternative approach that represents complex 3D environments with sets of images. These images include information describing the depth of each pixel along with the color and other properties. We have developed algorithms for processing these depth-enhanced images to produce new images from viewpoints that were not included in the original image set. Thus, using a finite set of source images, we can produce new images from arbitrary viewpoints.
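To make the idea of producing new views from depth-enhanced images concrete, here is a minimal sketch of forward warping. It is an illustration under stated assumptions, not the group's algorithm: it assumes a pinhole camera with focal length `f` and principal point `(cx, cy)`, a target camera that differs from the source only by a translation `t`, and nearest-pixel splatting with a z-buffer. The function name `warp_depth_image` is hypothetical.

```python
def warp_depth_image(color, depth, f, cx, cy, t):
    """Forward-warp a depth image to a camera translated by t = (tx, ty, tz).

    color and depth are equally sized lists of rows; depth holds the z
    distance of each pixel in the source camera frame. Pixels of the
    result that no source pixel lands on stay None (holes).
    """
    h, w = len(depth), len(depth[0])
    out = [[None] * w for _ in range(h)]
    zbuf = [[float("inf")] * w for _ in range(h)]
    for v in range(h):
        for u in range(w):
            z = depth[v][u]
            # Back-project pixel (u, v) to a 3D point in the source frame.
            x = (u - cx) * z / f
            y = (v - cy) * z / f
            # Express the point in the target camera frame (translation only).
            xt, yt, zt = x - t[0], y - t[1], z - t[2]
            if zt <= 0:
                continue  # point is behind the target camera
            # Project into the target image and snap to the nearest pixel.
            u2 = round(f * xt / zt + cx)
            v2 = round(f * yt / zt + cy)
            # Keep the closest surface when several points hit one pixel.
            if 0 <= u2 < w and 0 <= v2 < h and zt < zbuf[v2][u2]:
                zbuf[v2][u2] = zt
                out[v2][u2] = color[v][u]
    return out
```

The `None` entries left in the output mark regions that were occluded or outside the source image; filling them generally requires combining several source images.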

Wide Area Visuals with Projectors

This project aims to develop a robust multi-projector display and rendering system that is portable and can be rapidly set up and deployed in a variety of geometrically complex display environments. Our goals include seamless geometric and photometric image projection, and continuous self-calibration using one or more pan-tilt-zoom cameras.

Basic Calibration Method

We use two pan-tilt-zoom cameras and adapt the calibration procedures developed by Sinha to geometrically calibrate an array of projectors and extract an accurate display surface model. This method is expected to achieve excellent panoramic registration and compensation for lens distortion in both the cameras and the projectors.

Rendering System

We built a PC-cluster rendering system that uses a 2-pass rendering algorithm running on either Chromium or UNC’s PixelFlex software framework.

Continuous Calibration using Imperceptible Structured Light

We embedded structured light patterns in normal color imagery so that they are imperceptible to humans observing the display yet visible to a synchronized camera. The recovered structured light imagery is then used to continuously refine the calibration geometry while the system is in use, making the system more tolerant of changes in display geometry.
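One common way to hide a pattern in projected imagery is complementary-frame modulation, sketched below. This is an assumption for illustration; the text above does not say which embedding scheme the system uses, and the names `embed_pattern`, `recover_pattern`, and `delta` are hypothetical. Each pixel is shown as two rapidly alternating frames, one brightened and one darkened according to the pattern bit. A viewer integrates the two frames and sees roughly the original intensity, while a camera synchronized to the individual frames can recover the bit from their difference.

```python
def embed_pattern(image, pattern, delta=4):
    """Split a grayscale image (values 0..255) into two complementary frames.

    pattern holds one bit per pixel. The average of the two frames equals
    the image intensity, except near 0 and 255 where the intensity is
    first clamped so that both frames stay within the displayable range.
    """
    pos, neg = [], []
    for img_row, pat_row in zip(image, pattern):
        pos_row, neg_row = [], []
        for value, bit in zip(img_row, pat_row):
            base = min(max(value, delta), 255 - delta)
            offset = delta if bit else -delta
            pos_row.append(base + offset)
            neg_row.append(base - offset)
        pos.append(pos_row)
        neg.append(neg_row)
    return pos, neg


def recover_pattern(pos, neg):
    """Recover the pattern bits from two frames captured in sync."""
    return [[1 if p > n else 0 for p, n in zip(pos_row, neg_row)]
            for pos_row, neg_row in zip(pos, neg)]
```

In this idealized sketch the camera sees exactly the projected values; a real system would also have to account for camera noise, surface reflectance, and projector response when choosing `delta`.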